The Triforce of Deployment

Continuous delivery is great. Fortunately, so are all the tools you need to get there.

I've talked about this a number of times—including recently on Software Engineering Radio—so I thought it was finally time to write it down. (This post has been in my drafts for a while so I decided to just publish it.)

Let's assume, for now, that you're sold on continuous delivery. You think it sounds great. You'd love to deploy small, safe changes, several times a day. You love reducing risk, attributing effects to obvious causes, and using ownership to increase quality. Sounds fantastic! But right now, you're on a periodic—or worse, feature-based—release schedule. How do you get from here to there?

The Triforce of Deployment is a model of the three areas you need to line up to be in a place where you can comfortably start deploying continuously:

- Wisdom: insight into the system,

- Courage: confidence derived from a number of sources, and

- Power: a set of new tools and practices.

Fortunately, all of these things are, by themselves, good things. So even if you aim for continuous delivery and miss, you'll end up in a much better place.

Wisdom

Insight into the current state of the system, in real-time, is incredibly powerful. There are any number of ways to approach it, and you'll need to pick what's right for you. At a minimum, you'll want three things:

- observability of real-time network, machine, and application metrics—the latter will absolutely mean changes to the codebase to instrument events you care about;

- log aggregation; and

- feedback loops.

Fortunately, there are a number of companies and open source projects to help with this. You can run things like StatsD or Prometheus for metrics, and rsyslog for log aggregation, or you can buy solutions like Datadog, Splunk, AWS X-Ray, Honeycomb, or plenty of others.
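As a rough illustration of what "instrumenting events you care about" can look like, here is a minimal sketch that sends a counter and a timer in the plain-text StatsD line format over UDP. The metric names, the `handle_checkout` function, and the host and port (8125 is the usual StatsD default) are assumptions for illustration. A real codebase would more likely use an existing client library.

```python
import socket
import time


def statsd_line(name: str, value: int, kind: str) -> bytes:
    """Format one metric in the StatsD line format: <name>:<value>|<type>."""
    return f"{name}:{value}|{kind}".encode("ascii")


def send_metric(line: bytes, host: str = "127.0.0.1", port: int = 8125) -> None:
    """Fire-and-forget a metric over UDP; a metrics failure should never break the app."""
    try:
        with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
            sock.sendto(line, (host, port))
    except OSError:
        pass  # deliberately ignored: losing a data point is better than failing a request


def handle_checkout() -> None:
    """Hypothetical request handler, instrumented with a counter and a timer."""
    start = time.monotonic()
    # ... the real work would happen here ...
    elapsed_ms = int((time.monotonic() - start) * 1000)
    send_metric(statsd_line("checkout.completed", 1, "c"))          # "c" = counter
    send_metric(statsd_line("checkout.duration", elapsed_ms, "ms"))  # "ms" = timer
```

The point is less the transport than the habit: the events worth graphing are usually ones only the application knows about, so the code has to say when they happen.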

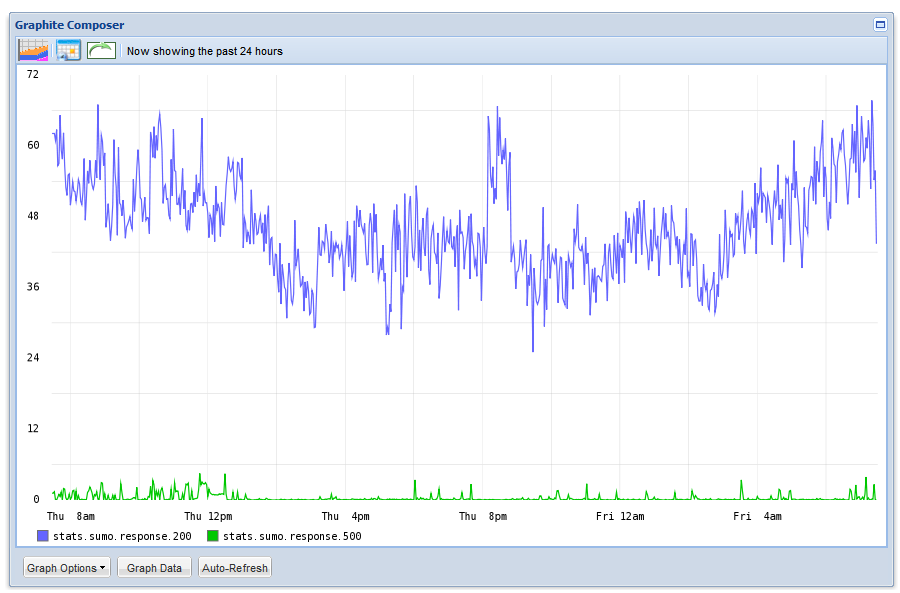

The important part is that these metrics are near real-time, meaning latency below one minute. You need to be able to correlate changes in application performance to each deploy.

At first this might only be possible by manually watching graphs and log output for a few minutes after deploys. But once you're measuring these things, you can turn them into automated, pushed alerts. (I tend to keep looking at the graphs anyway. It's always nice to see a number start moving the right way.) Measuring is the first step to monitoring and alerting.
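One way to turn "watching graphs after a deploy" into an automated check is to compare an error rate from a window before the deploy with the same-sized window after it. The sketch below assumes you can already fetch request and error counts for each window. The function name, the thresholds, and the floor on the baseline are all hypothetical choices, not recommendations.

```python
def should_alert(before_errors: int, before_requests: int,
                 after_errors: int, after_requests: int,
                 max_ratio: float = 2.0, min_requests: int = 100) -> bool:
    """Return True if the post-deploy error rate looks meaningfully worse."""
    if after_requests < min_requests:
        return False  # not enough traffic yet to say anything useful
    before_rate = before_errors / before_requests if before_requests else 0.0
    after_rate = after_errors / after_requests
    # Floor the baseline so a zero-error baseline doesn't turn one stray error into a page.
    baseline = max(before_rate, 0.001)
    return after_rate > baseline * max_ratio
```

For example, going from 5 errors per 1,000 requests to 30 per 1,000 would trip this check, while going from 5 to 6 would not.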

Feedback loops start at home: can everyone in your company file a bug? Can everyone alert someone if something is down? Make it easy. Don't worry about duplicates, and don't put an overly complicated process on the bug reporting form. Clean those up later. Create an email alias for raising issues, and make sure people save it in their phones.

In particular, make sure that there's a good pipeline between the bug reporting mechanisms and any customer-facing parts of the company, whether that's support/success, social, sales, etc.

Courage

Courage can be the hardest to get, especially if your deploys are big, complicated, risky affairs. If quality is low and hot-fixes are common, why would you be confident?

Appropriate automated test coverage (whether unit, integration, end-to-end, or usually a mix of several) is a huge step toward confidence. Automated tests won't catch every bug, but they are an incredibly effective filter for a large set of potential bugs.

It's important that writing and running tests be on the happy, easy path for developers (and if it's not happy or easy, then at least on the critical path). That means the test suite must be fast, flaky tests must be fixed or excised, and writing new tests must be required* for new code.

Monitoring and alerting on application metrics can round out gaps in a test suite as part of an overall plan for confidence in production. (You still need tests to catch things before they go to prod.)

Processes are also a source of confidence.

If you aren't deploying often, it's scary. Deploy more often, even if it's not continuous. If you're deploying monthly, or irregularly, try for weekly. The more often you deploy, the less scary it will be—and the less risk from accumulated changes.

A blameless culture will give your team the space to address systemic and process issues, which will make things smoother, while building each person's confidence that they won't, for example, be fired or reprimanded for honest mistakes.

Power

A couple of key tools super-charge an engineering team. They're also must-haves for continuous deployments.

The more frequently you deploy, the more someone will want to turn the process into a script. Let them! That's your first tool.

As deployments become more frequent, the opportunity for human error while following a script increases. Humans are bad at doing the same set of steps repetitively without fail, but computers are great at it, so automating the deployment process, even if it's kicked off manually, is critical. As much as possible, try to co-locate your deployment script with the code: have it live in the same repo. Deployment changes should be subject to review, and may need to be coordinated with code or other build changes anyway.
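A first automated deploy doesn't need to be sophisticated. The sketch below runs a list of steps in order and stops at the first failure, which is most of what a careful human following a checklist is trying to do. The steps in `DEPLOY_STEPS` are placeholders that only print a message; the real commands depend entirely on your stack.

```python
import subprocess
import sys

# Hypothetical steps; replace each one with the command your deploy actually needs.
DEPLOY_STEPS = [
    [sys.executable, "-c", "print('run the test suite')"],
    [sys.executable, "-c", "print('build the artifact')"],
    [sys.executable, "-c", "print('roll out the artifact')"],
]


def run_deploy(steps, run=subprocess.run) -> bool:
    """Run each step in order; stop and report failure at the first non-zero exit."""
    for step in steps:
        print("running:", " ".join(step))
        result = run(step)
        if result.returncode != 0:
            print("step failed, stopping deploy", file=sys.stderr)
            return False
    return True
```

Calling `run_deploy(DEPLOY_STEPS)` by hand and calling it from a CI job execute exactly the same steps, which is the property that matters here.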

There are a number of SaaS continuous integration tools (GitHub Actions, CircleCI, Buildkite, etc.) that let you take these automated steps and execute them, or you can host your own open- or closed-source versions. Not only will your deploys be faster and easier, but you'll be more confident that they were executed correctly.

The other critical tool is a feature flagging system. You need to be able to merge and deploy code that isn't working yet.

This can be a library like Waffle, a service like LaunchDarkly, or even, to start, something as simple as environment variables. A full-featured system will give you so much more control, confidence, and power in the long run, including the ability to run tests in production with live traffic. But the minimum viable flag is the ability to turn off code that isn't ready yet, and to separate turning it on from changing the code itself. (That is, running the exact same build artifact or version with different configuration should be able to hit either code path.)
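To make the "minimum viable flag" concrete, here is a small sketch of the environment-variable approach. The `FEATURE_` prefix, the flag name, and the `checkout_banner` function are assumptions made up for this example. The same code, deployed unchanged, takes either path depending only on configuration.

```python
import os


def flag_enabled(name: str, default: bool = False) -> bool:
    """Read a boolean flag from an environment variable such as FEATURE_NEW_CHECKOUT."""
    raw = os.environ.get(f"FEATURE_{name.upper()}")
    if raw is None:
        return default
    return raw.strip().lower() in {"1", "true", "yes", "on"}


def checkout_banner() -> str:
    """Hypothetical feature: the new path ships dark until the flag is turned on."""
    if flag_enabled("new_checkout"):
        return "new checkout"      # merged and deployed, but not necessarily finished
    return "classic checkout"      # existing behavior stays the default
```

Defaulting to off is the important design choice: a missing or misspelled variable leaves users on the existing code path.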

Feature flags allow you to continuously integrate small, incomplete changes, which is a prerequisite for safe continuous delivery. They require a change to how the team, including not just engineering but often product and QA, thinks about writing code and developing features. But once they get a taste for it, they won't want to turn back. Just say no to multiple integration branches!